Tesla Robotaxi: Unveil Date and Everything Else You Want to Know

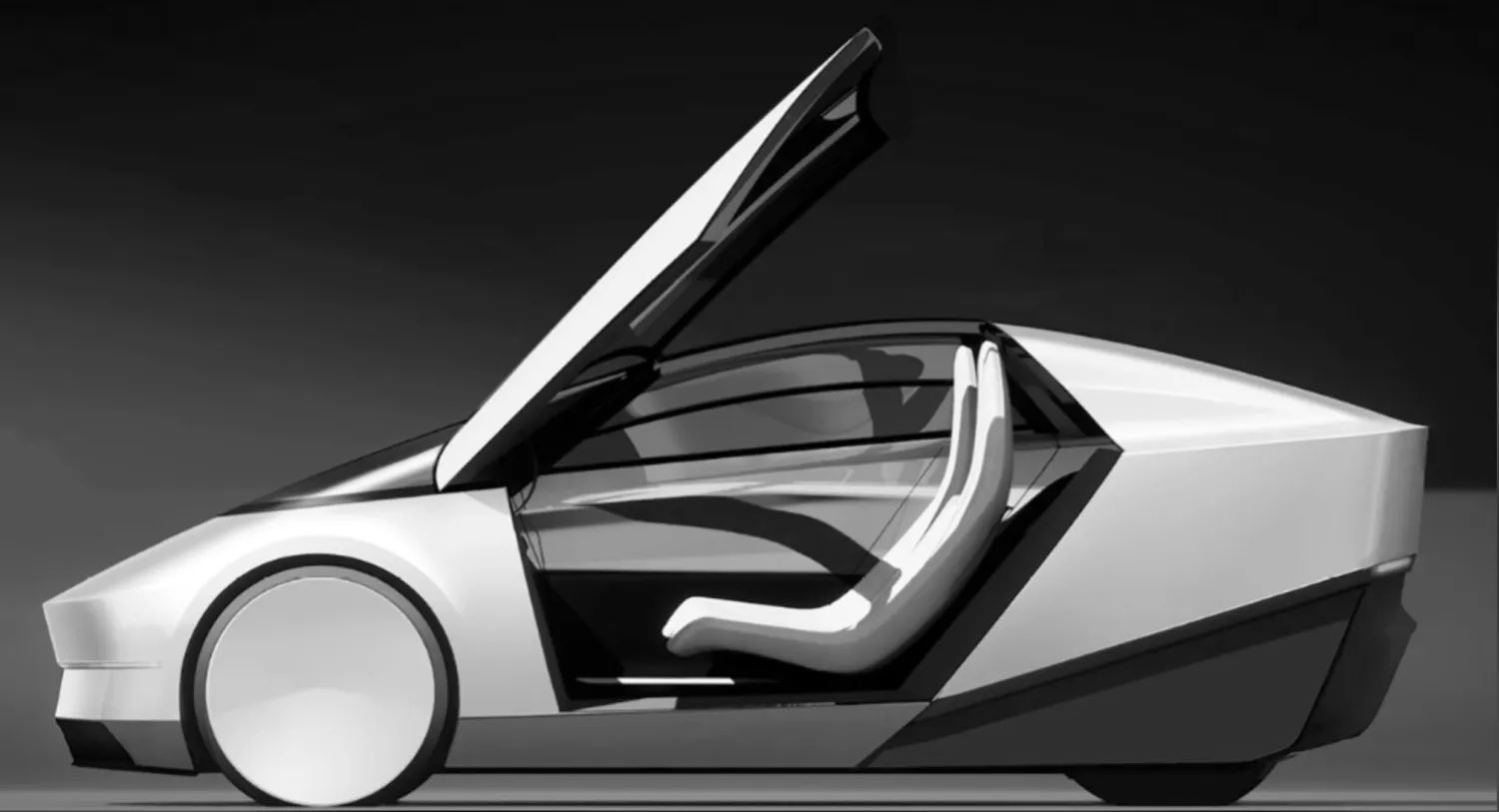

We have been hearing about Tesla’s Robotaxi concept for several years, but it seems like we may finally be getting close to this vehicle becoming a reality. Here is everything we know about the Robotaxi.

Official Reveal

Yesterday, Musk officially announced on X that Tesla would unveil the Tesla Robotaxi on August 8th, 2024. Tesla last unveiled a new vehicle back in November 2019 when they showed off the Cybertruck for the first time. Before that, they unveiled the Roadster 2.0 and the Tesla Semi at the same event in 2017, so these are certainly special occasions that only come along once every few years.

While it's always possible that Tesla may have to move the Robotaxi's unveil date, it's exciting to think that Tesla may be just four months from unveiling this next-gen vehicle.

Robotaxi and Next-gen Vehicle

Another piece of information about the Robotaxi came out yesterday when Musk replied to a post by Sawyer Merritt. Sawyer posted that Tesla's upcoming "$25k" vehicle and the Robotaxi would not only be based on the same platform, but that the Robotaxi would essentially be the same vehicle without a steering wheel. Musk replied to the post with a simple "looking" emoji.

While it's not surprising that two of Tesla's smaller upcoming vehicles are going to be built on the same platform, it's a little more interesting that Musk chose to reply with that emoji when the post talks about the Robotaxi being the "Model 2" without a steering wheel. This raises the possibility of Tesla showing off not only the Robotaxi at the August 8th event, but also its upcoming next-gen car.

Production Date

Back during Tesla's Q1 2022 earnings call, Musk talked a little about the timeline for Tesla's Robotaxi, stating that they plan to announce the vehicle in 2023 and begin mass production in 2024.

Given that Tesla was originally aiming for a 2023 unveil, a late 2024 date appears realistic. However, it now appears that the Robotaxi and the next-gen vehicle will have a lot in common, meaning that the Robotaxi's production date could be similar to that of the next-gen vehicle, whose production is currently slated to begin in "late 2025".

The difficulty in releasing an autonomous taxi, as the Robotaxi is meant to be, is the self-driving aspect. While Tesla has made great strides with FSD v12, the first version to come out of "beta," it's still a level-2 system that requires active driver supervision. A fully autonomous vehicle is still a big leap from where Tesla's FSD is right now, but as we saw with the jump from FSD v11 to v12, a lot can change in the next 18 to 24 months.

While we expect Tesla to remain focused on bringing its cheaper, next-gen vehicle to market ahead of potential competitors, the Robotaxi's production date could continue to shift in line with Tesla's progress on FSD.

👀

— Elon Musk (@elonmusk) April 5, 2024

Master Plan Part Deux

The history of Tesla’s Robotaxi starts with CEO Elon Musk's Master Plan Part Deux, published in 2016.

At the time, the concept was touted as normal Teslas with full self-driving (FSD) capability.

Once Tesla achieved Full Self-Driving, they would create a “Tesla Network” taxi service that would make use of both Tesla-owned vehicles and customer cars that would be hired out when not in use.

In April 2022, however, at the inauguration of Tesla’s new factory in Austin, Texas, Musk made headlines by announcing that the company would be working on a dedicated Robotaxi vehicle that would be “quite futuristic-looking”.

A Variety of Robotaxis

Once we get to a world of "robotaxis," it makes sense to continue evolving the interior of the vehicle to suit customer needs, for example by adding face-to-face seating, big sliding doors for easy access, 4-wheel steering, easier-to-clean surfaces, etc.

Tesla could even create a variety of Robotaxis that help meet specific needs. For example, Tesla could offer a vehicle that is better suited for resting, which could let you sleep on the way to your destination.

Another vehicle could be similar to a home office, offering multiple monitors and accessories that let you begin working as soon as you step inside the vehicle. Features such as these could bring huge quality-of-life improvements for some, giving people an hour or more back in their day.

The variety of Robotaxis doesn't need to end there. There could be other vehicles that are made specifically for entertainment such as watching a movie, or others that allow you to relax and converse with friends, much like you'd expect in a limousine.

Lowest Cost Per Mile

During Tesla's Q1 2022 financial results call, Musk stated that the Robotaxi would be focused on cost per mile and would be highly optimized for autonomy - essentially confirming that it will not include a steering wheel.

“There are a number of other innovations around it that I think are quite exciting, but it is fundamentally optimized to achieve the lowest fully considered cost per mile or km when counting everything,” he said.

During the call, Musk acknowledged that Tesla's vehicles are largely inaccessible for many people given their high cost, and he sees the introduction of Robotaxis as a way of providing customers with “by far the lowest cost-per-mile of transport that they’ve ever experienced.” The CEO believes that the vehicle is going to result in a cost per mile cheaper than a subsidized bus ticket. If Tesla can achieve this, it could drastically change the entire automotive industry and redefine car ownership. Is Tesla's future still in selling vehicles, or in providing a robotaxi service?

FSD Sensor Suite

Tesla hasn't revealed anything about the sensor suite that they're considering for the robotaxi, but given all of their work in vision and progress in FSD, it's expected to be the same or similar to what is available today, potentially with additional cameras or faster processing.

However, back in 2022, Musk gave this warning: “With respect to full self-driving, of any technology development I’ve been involved in, I’ve never really seen more false dawns or where it seems like we’re going to break through, but we don’t, as I’ve seen in full self-driving,” said Musk. “And ultimately what it comes down to is that to sell full self-driving, you actually have to solve real-world artificial intelligence, which nobody has solved. The whole road system is made for biological neural nets and eyes. And so actually, when you think about it, in order to solve driving, we have to solve neural nets and cameras to a degree of capability that is on par with, or really exceeds humans. And I think we will achieve that this year.”

With the Robotaxi unveil now approaching, it may not be long before we find out more details about Tesla's plan for the future and its truly autonomous vehicles.
