Spy Photo Hints at How Tesla's Front Bumper Camera Will Work

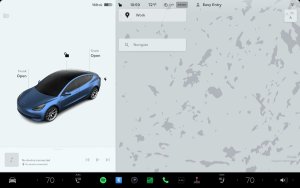

A recent Cybertruck photo revealed that Tesla will let drivers access the video feed from the vehicle's front bumper camera. The picture, which surfaced on Reddit, shows the Cybertruck's center screen displaying a view from what can only be a front bumper camera.

Enhancing Parking and Navigation

The image on Reddit not only confirms that Tesla is introducing a front bumper camera on the Cybertruck, but also shows that the company has already developed the software to access the new camera feed. The new camera could greatly enhance parking and navigation. This front view adds a new dimension to vehicle awareness, particularly in tight spaces, in complex urban environments, or when pulling as far forward as possible in the garage.

Front Bumper Camera Software

The partial image of the Cybertruck's screen shows the front bumper camera streamed on the vehicle's display. The front camera appears to be displayed using the vehicle's existing Camera app, which is currently used to display the backup camera.

Our first glimpse at the front bumper camera UI and previous comments by Elon Musk may reveal just how the updated Camera app will work.

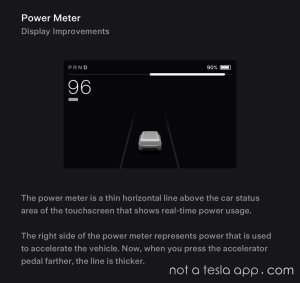

Today, the Camera app displays the reverse camera on top and, if enabled, the fender (or repeater) cameras underneath.

However, this new photo appears to illustrate something much more helpful. We can see the front bumper camera on top and smaller, thumbnail-like camera views underneath it. These narrower thumbnails lead us to believe that Tesla will have three thumbnails underneath the main camera instead of two. That would give you access to the front, reverse, and left and right fender views.

Looking further back, we may just see where Tesla is headed with this new feature.

In 2020, Tesla added the ability to display the left/right fender camera views when using the reverse camera.

Right after the side repeater cameras were made available, a Tesla owner asked Musk whether it would be possible to enlarge the side-view cameras when backing up. In his unmistakable style, Elon replied, "Yes, coming soon."

Based on Musk's comment and the UI in the spy photo, it looks like Tesla is doing just that. You will likely be able to tap on any of the camera views in the app to enlarge them, allowing you to seamlessly switch between the front, rear, and repeater camera views.

This layout is similar to what Tesla already provides in their Sentry Mode viewer, which allows you to tap on various camera views to enlarge them on the screen.

Tesla may even go one step further and automatically switch between the front and rear-facing cameras depending on whether you're moving forward or backward.

Bird's Eye View

Tesla's Camera app is starting to look like the Multi-Camera View introduced in the Tesla app recently. However, it falls short of a true 360-degree image or "bird's eye view" that Tesla owners have been requesting.

Addressing the Blind Spot Dilemma

Adding a front bumper camera could significantly reduce the blind spot issue that became more apparent when Tesla removed the sensors from the front bumper. Tesla's decision to remove the ultrasonic sensors (USS) in late 2022 sparked debates and left several questions unanswered. Many were concerned about the effectiveness of the Tesla Vision system in replacing the USS.

As soon as the new vehicles were in the wild, Tesla owners were keen on testing the accuracy of Tesla Vision. It became clear that there was a blind spot directly in front of the bumper. This new camera location would correct that issue, though it does not provide the 360-degree view that some owners and critics call for. The Reddit image does not indicate that such a view has been implemented, leaving room for speculation and possibly future enhancements.

The Model 3 Highland Connection

In connection with the Cybertruck's front bumper camera, there is growing excitement regarding the Tesla Model 3 Highland refresh. The newly designed bumper shape and smoother front end, combined with redesigned headlights, provide opportunities for more technology implementation. Trusted sources have confirmed that a front bumper camera will indeed be part of the Highland update, reinforcing the connection between these two vehicles and the company's forward-thinking approach.

While the Reddit revelation of the Cybertruck's front bumper camera view is fascinating, it's worth noting that this innovation may lead to further discussions and considerations within the Tesla community and the automotive industry. Including a front bumper camera on the Cybertruck and possibly the Model 3 Highland aligns with Tesla's continuous advancements in design and technology, marking a significant development for the company and its consumers. It represents a tangible step in solving real-world driving challenges, enhancing the driving experience without drastically changing the vehicle's aesthetic appeal.
