This update is FSD Beta 11.4.8, which is currently being tested internally by Tesla employees.

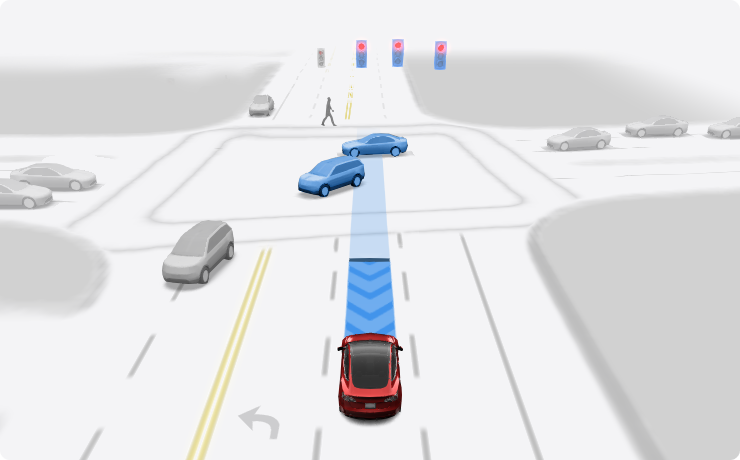

Single Pull to Start Autopilot

You can set Autopilot to start when you pull down the right stalk once, rather than twice. To choose this setting, go to Controls > Autopilot > Autopilot Activation > Single Pull.

Be aware that with Single Pull, when Autopilot Features is set to Autosteer (Beta), you'll bypass Traffic-Aware Cruise Control. Similarly, when Autopilot Features is set to Full Self-Driving (Beta), you'll bypass both Autosteer (Beta) and Traffic-Aware Cruise Control.

With Single Pull, when you cancel Autosteer (Beta) or Full Self-Driving (Beta), whether by taking over the steering or by pushing up the stalk once, you'll immediately return to manual driving.
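To make the difference between the two activation settings concrete, here is a small state-machine sketch in Python. It is a hypothetical model written for illustration, not Tesla's software: the `Mode` and `Activation` names and the `pull_down`/`cancel` functions are invented. The Single Pull behavior follows the description above; the default two-pull behavior, including a steering takeover dropping back to Traffic-Aware Cruise Control, is an assumption.

```python
from enum import Enum, auto


class Mode(Enum):
    MANUAL = auto()
    TACC = auto()       # Traffic-Aware Cruise Control
    AUTOSTEER = auto()  # Autosteer (Beta)
    FSD = auto()        # Full Self-Driving (Beta)


class Activation(Enum):
    SINGLE_PULL = auto()
    DOUBLE_PULL = auto()  # hypothetical name for the default two-pull setting


def pull_down(mode, activation, feature):
    """Return the new mode after one pull down on the right stalk.

    `feature` is the Autopilot Features setting: Mode.AUTOSTEER or Mode.FSD.
    """
    if activation is Activation.SINGLE_PULL:
        # One pull goes straight to the selected feature, bypassing TACC.
        return feature
    # Assumed default: the first pull engages TACC, the second the feature.
    if mode is Mode.MANUAL:
        return Mode.TACC
    return feature


def cancel(mode, activation, by_steering):
    """Return the new mode after a steering takeover or one push up on the stalk."""
    if activation is Activation.SINGLE_PULL:
        # Either action returns immediately to manual driving.
        return Mode.MANUAL
    # Assumption: in the default mode, a steering takeover leaves TACC engaged,
    # while pushing up the stalk returns to manual driving.
    if by_steering and mode in (Mode.AUTOSTEER, Mode.FSD):
        return Mode.TACC
    return Mode.MANUAL
```

For example, `pull_down(Mode.MANUAL, Activation.SINGLE_PULL, Mode.FSD)` goes directly to `Mode.FSD`, and a subsequent `cancel(..., by_steering=True)` under Single Pull lands in `Mode.MANUAL` rather than an intermediate cruise-control state.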

As with all Autopilot features, you must continue to pay attention and be ready to take immediate action including canceling the feature and returning to manual driving.

FSD Beta 11.4.8

-Added option to activate Autopilot with a single stalk depression, instead of two, to help simplify activation and disengagement.

-Introduced a new, efficient video module to the vehicle detection, semantics, velocity, and attributes networks, allowing increased performance at lower latency. This was achieved by creating a multi-layered, hierarchical video module that caches intermediate computations to dramatically reduce the amount of compute needed at any particular time.

-Improved distant crossing object detections by an additional 6%, and improved the precision of vehicle detection by refreshing old datasets with better autolabeling and introducing the new video module.

-Improved the precision of cut-in vehicle detection by 15%, with additional data and the changes to the video architecture that improve performance and latency.

-Reduced vehicle velocity error by 3%, and reduced vehicle acceleration error by 10%, by improving autolabeled datasets, introducing the new video module, and aligning model training and inference more closely.

-Reduced the latency of the vehicle semantics network by 15% with the new video module architecture, at no cost to performance.

-Reduced the error of pedestrian and bicycle rotation by over 8% by leveraging object kinematics more extensively when jointly optimizing pedestrian and bicycle tracks in autolabeled datasets.

-Improved geometric accuracy of Vision Park Assist predictions by 16%, by leveraging 10x more HW4 data, tripling resolution, and increasing overall stability of measurements.

-Improved path blockage lane change accuracy by 10% due to updates to static object detection networks.
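The release notes describe the new video module only as "multi-layered, hierarchical" and as caching intermediate computations. As a rough analogy, not Tesla's architecture, the Python sketch below uses a swapped-in technique, a dilated temporal hierarchy with cached activations (similar in spirit to streaming WaveNet inference), to show why caching cuts per-frame compute. The class, the `fuse` layer, the per-frame `encode` stand-in, and the random weights are all hypothetical.

```python
import math
import random
from collections import deque


def fuse(current, past, weights):
    """Toy fusion layer: tanh(W^T [current; past]) on plain Python lists."""
    x = current + past  # list concatenation, length 2 * dim
    dim = len(weights[0])
    return [math.tanh(sum(x[i] * weights[i][j] for i in range(len(x))))
            for j in range(dim)]


class CachedTemporalHierarchy:
    """Hypothetical streaming temporal hierarchy with cached intermediates.

    Level k fuses its current input with its input from 2**k frames ago,
    so the top level sees 2**num_levels frames. Caching past inputs means
    each new frame costs one fusion per level instead of a full recompute.
    """

    def __init__(self, num_levels, dim, seed=0):
        rng = random.Random(seed)
        self.num_levels = num_levels
        self.dim = dim
        # Hypothetical random weights; a real model would learn these.
        self.weights = [[[rng.gauss(0, 1) / math.sqrt(2 * dim) for _ in range(dim)]
                         for _ in range(2 * dim)]
                        for _ in range(num_levels)]
        # caches[k] holds level k's last 2**k inputs, zero-padded at start.
        self.caches = [deque([[0.0] * dim] * 2 ** k, maxlen=2 ** k)
                       for k in range(num_levels)]
        self.fusions = 0  # counts fusion ops to show the per-frame cost

    def encode(self, frame):
        # Stand-in for a per-frame backbone (hypothetical).
        return [math.tanh(v) for v in frame]

    def step(self, frame):
        """Process one new frame, reusing cached outputs from earlier frames."""
        h = self.encode(frame)
        for k in range(self.num_levels):
            cache = self.caches[k]
            past = cache[0]  # this level's input from 2**k frames ago
            cache.append(h)  # cache the current input for later frames
            h = fuse(h, past, self.weights[k])
            self.fusions += 1
        return h

    def recompute(self, frames):
        """Uncached reference: rebuild the whole tree for the last frame."""
        def inp(k, t):
            if t < 0:
                return [0.0] * self.dim  # same zero padding as the caches
            return self.encode(frames[t]) if k == 0 else out(k - 1, t)

        def out(k, t):
            return fuse(inp(k, t), inp(k, t - 2 ** k), self.weights[k])

        return out(self.num_levels - 1, len(frames) - 1)
```

With `num_levels=3`, the top output covers 8 frames. Rebuilding that tree from scratch takes 7 fusions and 8 encodes per frame, while the cached `step` does 3 fusions and 1 encode; `recompute` is included only to check that both paths produce the same output.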